Thanks for all the research you do! I wince whenever I have to do something with the ICertView interface; it’s so archaic. I hope whenever Microsoft finally replaces the ADCS product, there will be native .NET libraries to perform queries and such.

ADCS Certification Authority Database query numbers

Hello everyone, I’m back again and today I want to share some thoughts on retrieving massive results from ADCS Certification Authority database.

Expand Your PKI Visibility

Discover why seeing is securing with revolutionary PKI monitoring and alerting.

Learn More About PKI Spotlight®Point of interest

As a part of my ongoing project I had to collect database statistics (simply, number of revoked, issued, pending, failed, denied requests) and my concern was query performance on relatively large databases. The plan was simple, enumerate entire Request table and collect numbers based on Request Disposition code.

Problem background

ADCS Certification Authority uses JET/ESE database to store its data. JET database is compact and enough efficient for single application purposes, it doesn’t require complex infrastructure to run comparing to Microsoft SQL Server. The biggest downside of JET is the lack of query engine, so database clients rely on DB owner-provided query engine. In the case of ADCS Certification Authority, query engine is implemented in ICertView COM interface. This interface provides very basic capabilities, such as:

- Filtering – filtering is done based on a column value. Multiple filters are combined only using ‘AND’ operator. There is no support for ‘OR’ operator to combine filters

- Ordering – ascendant or descendant.

- Projection – you can specify column set to return

- Paging – paging is implemented only via IEnumCERTVIEWROW::Skip method

That’s all. ICertView lacks many features that SQL provide. For example, count() function that greatly helps in collecting statistics from table. This function is part of most paging operations: determine how many pages we can query, determine if there is next/previous page. Another limitation associated with paging – the lack of FETCH clause. There is OFFSET clause, but not FETCH. ICertView lacks any kind of groupings, joins and other useful stuff we find in SQL. We have to deal with very basic implemented API. In this post, I will focus only on paging functionality.

Test case

I have a CA database with 1million rows in Request table. I did CA dump performance tests after inserting every 100k rows. The plan was as this:

- Insert 100k rows

- Backup CA database to replay and truncate log files

- Restart CA service

- Collect statistics by querying only two columns: RequestID and Disposition

- Page Size: 100k rows. It is ok to store 100k rows in memory with just two integral columns.

- Repeat step 1 until we hit 1mil rows. Total rows in test is 1000698 (a bit above 1mil).

the code for test was as follows:

Int32 pageSize = 100000; Int32 page = 0; Int32 rowsTaken; Int32 totalRows = 0; // outer do-while to loop over pages do { ICertView certView = new CCertViewClass(); certView.OpenConnection(configString); certView.SetResultColumnCount(2); Int32 reqIDindex = certView.GetColumnIndex(0, "RequestID"); certView.SetResultColumn(reqIDindex); Int32 dispIDindex = certView.GetColumnIndex(0, "Disposition"); certView.SetResultColumn(dispIDindex); rowsTaken = 0; IEnumCERTVIEWROW dbRow = certView.OpenView(); // move to current page by skipping rows outside of current page dbRow.Skip(page * pageSize); // start reading current page. 'FETCH' clause is implemented in a second condition // so we read no more than 'pageSize' rows while (dbRow.Next() != -1 && rowsTaken < pageSize) { totalRows++; rowsTaken++; IEnumCERTVIEWCOLUMN dbCol = dbRow.EnumCertViewColumn(); // loop over columns while (dbCol.Next() != -1) { String colName = dbCol.GetName(); Object colValue = dbCol.GetValue(0); // do whatever you need with columns } } Marshal.ReleaseComObject(dbRow); Marshal.ReleaseComObject(certView); page++; GC.Collect(); } while (pageSize == rowsTaken);

I’ve added stop watches at important places. In addition, I did tests with my intelligent .NET wrapper over ICertView interface to compare how much overhead it adds.

Test results

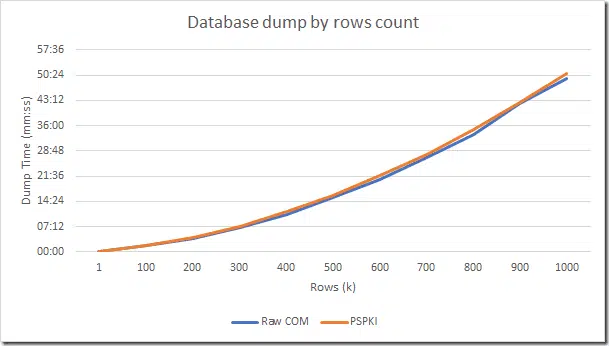

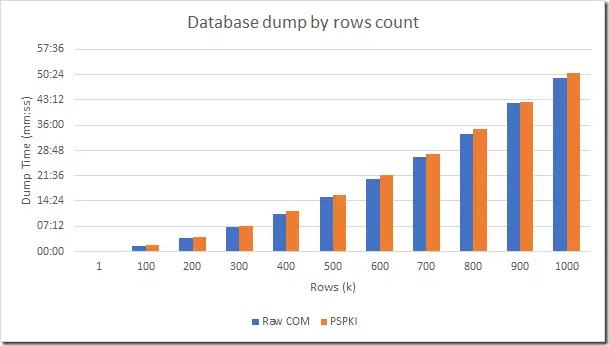

Time required to dump entire database depending on its size is shown in a diagram below:

p.s. second chart compares raw COM access with minumum logic, error handling and my own wrapper of DB reader (part of PSPKI) with advanced logic and error handling. The difference is less than 5%, so I consider PSPKI database reader very effective and fast enough.

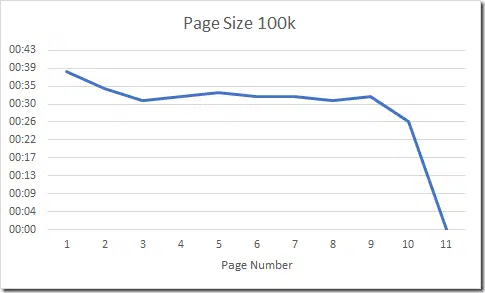

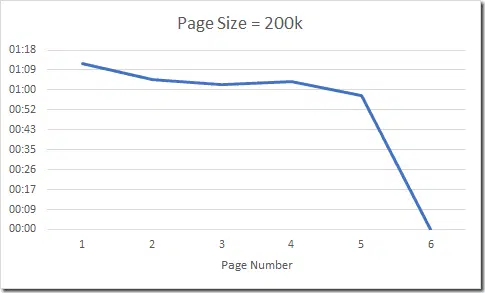

Results were upsetting. Time growth wasn’t linear as I would expect (i.e. 1mil rows should take ~10x time required to query 100k rows, or be nO(n)). However, I observed non-linear time growth. It requires about 50sec to read 100k rows and 1mil took 50min. So actual time increase is 60x than expected 10x. This is certainly terrible. Spending nearly 1hr to dump 1mil big database is not acceptable. I dig deeper and analyzed every page performance in final database (1mil+ rows) and here are results:

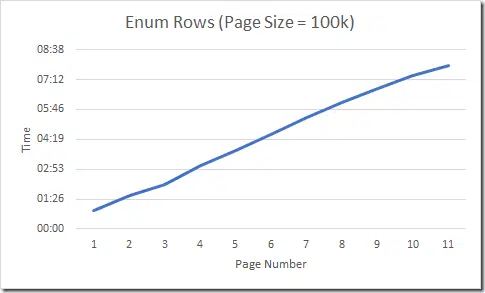

and here is the answer. Every next page takes nearly twice more time than previous. Interesting, that last (11th) page contained only 698 rows (excess above 1mil) and it took 7min 52 sec to read these 698 rows.

These numbers led me to conclude that paging functionality in ADCS is terrible and should not be used when reading more than 1 page. But how would you do paging with nO(n) time? It was evident that first page retrieval was fastest. One approach to tweak the performance would be reading page from the beginning of the table and then read page from the end of the table. Shift pages and continue until you hit the middle of the table. This will improve results, but still way too far from nO(n).

Then I tweaked the code by avoiding ICertView paging functionality as follows:

- order table in ascending or descending

- sore last RequestID number you read in previous page (0 for the first page)

- apply restriction to read rows only above last remembered RequestID value

- read only one page

- repeat the loop until read entire database

Here is the updated code:

Int32 pageSize = 100000; Int32 totalRows = 0; Int32 rowsTaken; Int32 lastIndex = 0; do { ICertView certView = new CCertViewClass(); certView.OpenConnection(configString); certView.SetResultColumnCount(2); Int32 reqIDindex = certView.GetColumnIndex(0, "RequestID"); certView.SetResultColumn(reqIDindex); Int32 dispIDindex = certView.GetColumnIndex(0, "Disposition"); certView.SetResultColumn(dispIDindex); // read only rows with RequestID > last RequestID in previous page. 0 for first page certView.SetRestriction(reqIDindex, 0x10, 1, lastIndex); rowsTaken = 0; IEnumCERTVIEWROW dbRow = certView.OpenView(); while (dbRow.Next() != -1 && rowsTaken < pageSize) { totalRows++; rowsTaken++; IEnumCERTVIEWCOLUMN dbCol = dbRow.EnumCertViewColumn(); while (dbCol.Next() != -1) { String colName = dbCol.GetName(); Object colValue = dbCol.GetValue(0); // write RequestID to a variable for paging purposes if (colName == "RequestID") { lastIndex = (Int32)colValue; } } } Marshal.ReleaseComObject(dbRow); Marshal.ReleaseComObject(certView); dbRow = null; certView = null; GC.Collect(); } while (pageSize == rowsTaken);

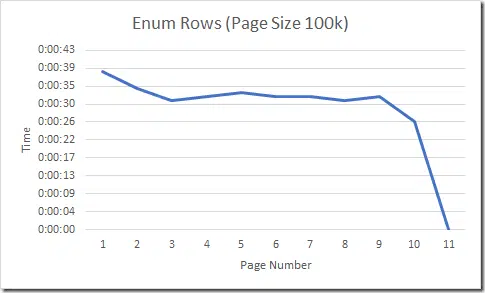

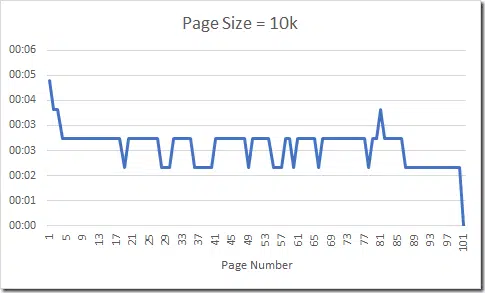

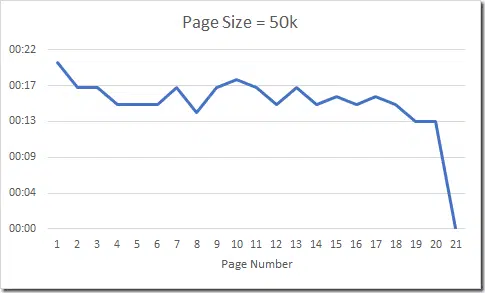

And here are results:

Now this looks more like nO(n)! And you can see that last page took no time since it required to read only 698 rows (rather than 100k). Using this code, I was able to collect statistics in just 5min 40sec. Comparing with 50mins when using ICertView paging capabilities.

Tweaking page size

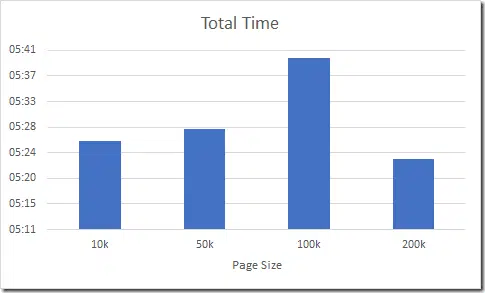

Normally, you would set page size as small as you need and as big as you can afford and you have to find a right balance between your capabilities and page size when you need to read large sets. In my given case, I need to read entire database. I tried different page size to figure out which one is optimal: 10k, 50k, 100k, 200k. And here are results:

for every page size average value was around 5min and 30sec. We see a spike in 100k, but it is not averaged result, so in multiple runs this will average to 5:30min. This is the best time I was able to achieve while reading 1mil rows from Request table using available APIs. Since all page sizes resulted in almost same time, there is no definitive recommendation on what you should use when reading entire database and it depends on your hardware capabilities. But a value between 50k-100k is good.

PSPKI sample code

the code I used was in C#. If you use PowerShell PKI module, then you would go with this solution to dump entire database:

$CA = Connect-CertificationAuthority $PageSize = 50000 $LastID= 0 do { $ReadRows = 0 $CA | Get-IssuedRequest -Filter "RequestID -gt $LastID" -Page 1 -PageSize $PageSize | %{ $ReadRows++ $LastID = $_.Properties["RequestID"] # do whatever you need with row item in $_ } } while ($ReadRows -eq $PageSize)

Related Resources

Comments

-

-

I wouldn’t expect any improvements in this field. Backward compatibility will overweight this. ADCS will retire sooner than we get .NET libraries for this product.

-

-

Very well done Vadims!

-

Nice, so can we migrate the database to MS SQL Server? And how, do you have example? Thank you.

-

Microsoft ADCS does not support MSSQL, so there is no migration path.

-

Schedule a Demo

Schedule a Demo