Creating Highly Available CDP and AIA Locations with Azure, Part 4

Hello everyone, this is the fourth blog post in my “Creating Highly Available CDP and AIA Locations with Azure” series.

In this blog post, we will configure an on-premises environment to upload CA certificates and CRLs to a primary Azure storage account, which will then be replicated to a secondary storage account and served to clients through Azure Front Door (AFD).

This process consists of three parts:

- Acquire a SAS token based on the stored access policy to authenticate against the storage account.

- Write a script that performs a file upload from the “CertData” shared folder to Azure and configure a scheduled task to run the script on a regular basis.

- Migrate clients to the new CDP/AIA retrieval experience.

Acquiring Storage Access Token

In this section, you will learn how to acquire an access token that an automated process (a scheduled task) can use to upload data to a storage account. Before we start, I want to recap a step we performed on the primary storage account and explain why we did it. Part 2 included a “Configure Stored Access Policy” section, and I would like to provide some background for it.

In Azure storage accounts, you have several options to authenticate against a storage account for write access:

- Access Keys. This is the simplest and broadest way to access a storage account. Every Azure storage account provides two access keys (key1 and key2), which grant full access to all data in the account, including blobs, file shares, tables and queues. While it is the easiest way, it violates the least privilege principle because there is no way to constrain the access. If a key is compromised, anyone who holds it has full access to the entire storage account until you regenerate the key. Once you regenerate the key, all legitimate workloads that use it stop working until you update them with the new key, which can affect many applications. As a result, this access method is NOT recommended for use in production.

- Shared Access Signatures (SAS). SAS is a very flexible way to constrain access to Azure storage accounts. It allows granular constraints not only on access level and scope, but also on the validity period and the caller IP address. While SAS lets you apply the least privilege principle, it has one downside: there is no way to revoke a particular SAS token if it is compromised or access must be terminated. A SAS is signed with an access key (see above) and remains valid as long as its constraints are satisfied and the signing key is valid. This means that to revoke a SAS, you have to regenerate the access key that signed it, with all the consequences described in the previous point. As a result, this access method is NOT recommended for use in production.

- Stored Access Policies. A stored access policy is an additional layer around SAS that provides a means to revoke only a subset of access tokens by removing or renaming the respective access policy. You don’t need to regenerate the access key, which minimizes the number of affected applications and reduces the outage period and scope. Currently, it is the RECOMMENDED way to access Azure storage when Microsoft Entra ID authentication is not possible. We will use a stored access policy to generate a policy-based SAS, which will then be used in the on-premises script.
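The revocation model described above can also be sketched with the Az.Storage module. This is only an illustration, not the procedure from Part 2: the resource group, account, and policy names are placeholders, and the write-only permission and one-year expiry are assumptions that you should replace with whatever your policy actually defines.

```powershell
# Sketch only: every name and value below is a placeholder, not taken from this series.
function New-PolicyBasedSas {
    param(
        [Parameter(Mandatory = $true)][string]$ResourceGroupName,
        [Parameter(Mandatory = $true)][string]$StorageAccountName,
        [string]$Container  = 'certdata',
        [string]$PolicyName = 'pki-upload'   # hypothetical policy name
    )

    # Storage context of the account, obtained through the signed-in Az session
    $ctx = (Get-AzStorageAccount -ResourceGroupName $ResourceGroupName -Name $StorageAccountName).Context

    # Create the stored access policy (assumed: write permission, valid for one year)
    New-AzStorageContainerStoredAccessPolicy -Container $Container -Policy $PolicyName `
        -Permission w -ExpiryTime (Get-Date).AddYears(1) -Context $ctx | Out-Null

    # Issue a SAS that references the policy and is restricted to HTTPS
    New-AzStorageContainerSASToken -Name $Container -Policy $PolicyName -Protocol HttpsOnly -Context $ctx
}

# Revocation: removing the policy invalidates every SAS that references it,
# without touching the account keys.
# Remove-AzStorageContainerStoredAccessPolicy -Container 'certdata' -Policy 'pki-upload' -Context $ctx
```

Because the SAS only references the policy by name, tokens issued from other policies, or signed directly with the key, are not affected when this one policy is removed.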

Acquire Storage Access Token Using Azure Portal

This section contains steps to generate a storage account policy-based SAS using Azure Portal.

- Log on to Azure Portal.

- Expand the left sidebar menu and select Resource groups.

- In the resource group list, select the resource group where storage accounts reside.

- Select the primary storage account.

- On the left navigation menu, expand the Data storage section and select Containers.

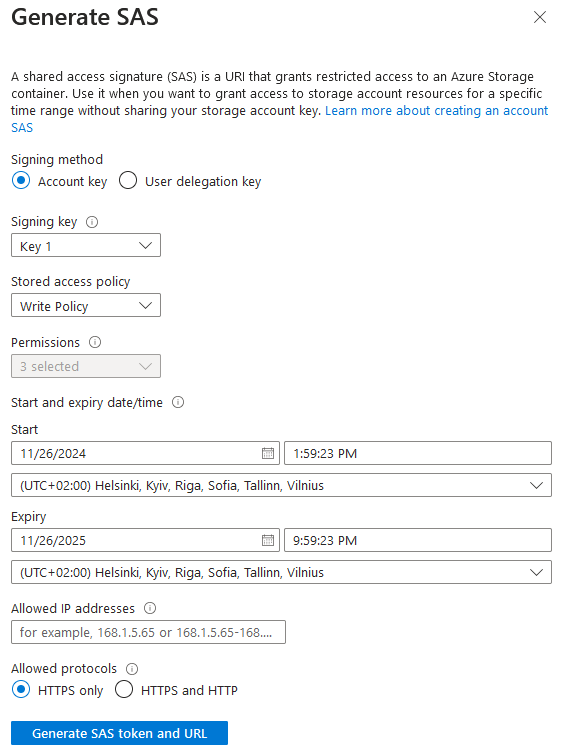

- Open the three-dot context menu on the certdata blob container and select the Generate SAS menu item.

- In the Stored access policy list, select the policy we created during the storage account provisioning process (see Part 2 in this series).

- Optionally, configure validity for SAS token and allowed IP addresses if needed.

- Ensure that Allowed protocols is set to HTTPS only. Our storage accounts allow both HTTP and HTTPS for read access, which is fine, but we must use HTTPS for write access.

- Press the Generate SAS token and URL button.

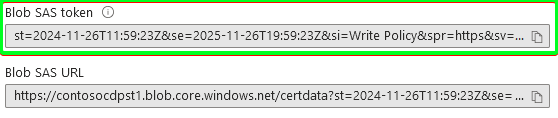

- Two new fields, SAS token and SAS URL, will appear in the Generate SAS dialog. We need only the SAS token. Save this token somewhere; we will need it later.

Remember that SAS tokens have an expiration date. Be sure to document the SAS token generation process and use a notification system that warns you about an expiring SAS token in advance to avoid a PKI services outage.
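As a minimal sketch of such an expiration check, the function below reads the `se` (signed expiry) field from a SAS token string. The function name and the 30-day threshold are my own choices for illustration. Note one assumption: when the expiry time is defined in the stored access policy rather than in the token, the token carries no `se` field and the function returns `$null`; in that case the expiry has to be read from the policy itself.

```powershell
function Get-SasTokenExpiry {
    [CmdletBinding()]
    param([Parameter(Mandatory = $true)][string]$SasToken)

    # A SAS token is a query string: name=value pairs separated by '&'
    foreach ($pair in $SasToken.TrimStart('?') -split '&') {
        $name, $value = $pair -split '=', 2
        if ($name -eq 'se') {
            # The value is URL-encoded ISO 8601 text, e.g. 2030-01-01T00%3A00%3A00Z
            $text = [uri]::UnescapeDataString($value)
            return [DateTimeOffset]::Parse($text, [cultureinfo]::InvariantCulture).UtcDateTime
        }
    }
    return $null   # no 'se' field: expiry is defined elsewhere (for example, in the policy)
}

# Example with a made-up token: warn when fewer than 30 days remain
$expiry = Get-SasTokenExpiry -SasToken 'sv=2022-11-02&si=pki-upload&se=2030-01-01T00%3A00%3A00Z&sig=placeholder'
if ($expiry -and $expiry -lt [DateTime]::UtcNow.AddDays(30)) {
    Write-Warning "SAS token expires on $expiry (UTC)"
}
```

A check like this can run as part of the upload script or as a separate monitoring task and feed whatever alerting system you already use.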

Create Data Upload PowerShell Script

This section describes how to create a script that uploads CA certificates and CRLs from an on-premises shared folder to the primary storage account.

Cloud services offer many options to upload data from on-premises servers to storage accounts. I will show one option, which is based on a script and a scheduled task. You can also employ serverless solutions, such as Azure Functions or Automation Accounts, depending on your needs and requirements. However, these topics are beyond the scope of this article.

Steps in this section are performed on an on-premises machine that has Read access to the internal “CertData” shared folder and a connection to the internet. In addition, the Az.Storage PowerShell module must be installed on this machine.

Create a PowerShell script file with the following content:

    # Load required .NET assemblies
    Add-Type -AssemblyName System.Web
    # Ensure that the PS session uses TLS 1.2
    [Net.ServicePointManager]::SecurityProtocol = [Net.SecurityProtocolType]::Tls12

    # Define a function that uploads file objects received from the pipeline to the Azure storage account
    function Upload-PKI2Azure {
        [CmdletBinding()]
        param (
            [Parameter(Mandatory = $true, ValueFromPipeline = $true, Position = 0)]
            [System.IO.FileInfo]$File
        )
        begin {
            # Initialize the Azure storage context with the SAS token and explicitly specify HTTPS.
            # Because it is placed in the 'begin' block, the context is created only once for the entire pipeline.
            $StorageContext = New-AzStorageContext -StorageAccountName $PrimaryStorageName -SasToken "$SasTokenCreatedInPreviousStep" -Protocol Https
        }
        process {
            # Retrieve the MIME type for the file. By default, the Azure storage MIME type is
            # "application/octet-stream", which is not what we want, so we provide the correct one explicitly.
            $contentType = [System.Web.MimeMapping]::GetMimeMapping($File.Name)
            # Upload the file from the pipeline by providing the target blob container name and MIME type.
            # Notice that we convert file names to lowercase.
            Set-AzStorageBlobContent -File $File.FullName -Container "certdata" -Blob $File.Name.ToLower() -Properties @{"ContentType" = $contentType} -Context $StorageContext -Force
        }
    }

    # Read the source share, filtering for CRT and CRL files, and pass them to the upload function
    Get-ChildItem "\\$DfsServer\$ShareName\*.cr*" -File | Upload-PKI2Azure

Modify the $SasTokenCreatedInPreviousStep, $PrimaryStorageName, $DfsServer and $ShareName variables to match your values.

This script contains an embedded SAS token, which is somewhat sensitive. Ensure that only a limited set of users can read this script file, or store the SAS token externally in a secure way and pass it to the script as a parameter at runtime. The exact steps are organization-specific and I have no specific recommendations for this.
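One possible way to keep the token out of the script file, shown here only as a sketch and not as a recommendation, is to store it as a SecureString with Export-Clixml. On Windows, the exported value is protected with DPAPI, so it can be decrypted only by the same user account on the same machine; the setup step must therefore run under the account that runs the scheduled task. The function names and the file path are hypothetical.

```powershell
# One-time setup: run interactively as the account that will run the upload task.
function Save-SasToken {
    param([Parameter(Mandatory = $true)][string]$Path)
    Read-Host -Prompt 'Paste SAS token' -AsSecureString | Export-Clixml -Path $Path
}

# At run time: load the protected token and convert it back to plain text,
# because New-AzStorageContext expects the SAS token as a string.
function Get-SasToken {
    param([Parameter(Mandatory = $true)][string]$Path)
    $secure = Import-Clixml -Path $Path
    return [System.Net.NetworkCredential]::new('', $secure).Password
}

# Hypothetical usage inside the upload script:
# $SasTokenCreatedInPreviousStep = Get-SasToken -Path 'C:\Scripts\sas-token.xml'
```

The file still deserves restrictive NTFS permissions, and a dedicated secret store may be a better fit if your organization already operates one.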

Run the script manually and ensure that it completes without errors. Navigate to the primary storage account in the Azure portal and ensure that the files are indeed there. Wait around five minutes, then navigate to the secondary storage account and ensure that the blobs are replicated from the primary storage account.

The next step is to schedule the script as a scheduled task and test that it runs correctly. You can delete all blobs from the primary storage account and run the task. After the task completes, the blobs should reappear in the primary storage account. Configure the scheduled task frequency to a reasonable value, for example every hour or another value of your choice.

It may be reasonable to configure the same task on multiple on-premises servers in case the primary server goes down due to maintenance or failure.
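The scheduled task can be registered with the built-in ScheduledTasks module. The following is a minimal sketch under several assumptions: the script path and task name are placeholders, the task runs under a service account that has Read access to the share, and the hourly interval matches the example above. On older Windows versions you may also need to specify a repetition duration.

```powershell
function Register-PkiUploadTask {
    param(
        [Parameter(Mandatory = $true)][pscredential]$Credential,      # account that runs the task
        [string]$ScriptPath = 'C:\Scripts\Upload-PKI2Azure.ps1',      # hypothetical script location
        [string]$TaskName   = 'Upload PKI assets to Azure'            # hypothetical task name
    )

    # Run the upload script in a non-interactive Windows PowerShell session
    $action = New-ScheduledTaskAction -Execute 'powershell.exe' `
        -Argument "-NoProfile -File `"$ScriptPath`""

    # Start now and repeat every hour
    $trigger = New-ScheduledTaskTrigger -Once -At (Get-Date) `
        -RepetitionInterval (New-TimeSpan -Hours 1)

    Register-ScheduledTask -TaskName $TaskName -Action $action -Trigger $trigger `
        -User $Credential.UserName -Password $Credential.GetNetworkCredential().Password
}

# Usage (prompts for the service account password):
# Register-PkiUploadTask -Credential (Get-Credential 'CONTOSO\svc-pkiupload')
```

After registration, `Start-ScheduledTask -TaskName 'Upload PKI assets to Azure'` triggers a run on demand, which is convenient for the delete-and-reupload test.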

Execute Preliminary Tests

At this point, we have configured all the required infrastructure elements:

- PKI servers publish CA certs, CRLs and other required assets to a local share on DFS server(s).

- A scheduled task uploads files from the local share to the primary Azure storage account.

- The primary storage account replicates blobs to the secondary one for better availability and lower latency.

- Azure Front Door is placed in front of the storage account pool to provide a fast and reliable interface to PKI clients.

Ensure that the first three points work as expected, i.e. CAs regularly publish new CRLs, the CRLs are copied to the primary storage account and then replicated to the secondary storage account.

Switch PKI Clients to Leverage Azure Front Door CDN

This is the last step in our migration process and involves reconfiguring a DNS entry. It is the simplest task and involves only one operation: update the existing public DNS record named “CDP” so that it points to the AFD endpoint host name (the one with the .azurefd.net suffix). After you update the DNS record, nslookup output should be similar to this:

    Name:      s-part-0025.t-0009.t-msedge.net
    Addresses: 2620:1ec:bdf::53
               13.107.246.53
    Aliases:   cdp.contoso-lab.lv
               Contoso-PKI-c9fggmd3dhg0f0dt.z03.azurefd.net
               shed.dual-low.s-part-0025.t-0009.t-msedge.net
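The same check can be scripted. The sketch below assumes a Windows machine where the Resolve-DnsName cmdlet is available; the helper function name is my own. It inspects the records returned for the CDP host name and reports whether any alias in the chain ends with .azurefd.net.

```powershell
function Test-AfdAlias {
    param([Parameter(Mandatory = $true)][object[]]$Record)

    # CNAME records expose the alias target in the NameHost property;
    # address records have no such property and are skipped by the filter.
    [bool]($Record | Where-Object { $_.NameHost -like '*.azurefd.net' })
}

# Usage on Windows, with the host name from the nslookup example:
# Test-AfdAlias -Record (Resolve-DnsName -Name 'cdp.contoso-lab.lv')
```

A result of `$true` indicates that the record already points at Azure Front Door; `$false` usually means the change has not propagated yet or the record still points at the old servers.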

If you decide to retire the existing internal IIS servers, make the same change on the internal DNS server. Note that DNS changes require some time to take effect.

Keep the existing IIS servers/websites that host CA assets running for a grace period, or keep them as a backup option.

Execute Final Tests

There is one final test left after updating DNS records: run the pkiview.msc MMC snap-in and ensure that no errors are reported.
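If you want a scriptable complement to the snap-in, a simple HTTP request against a published file can confirm that it is reachable through the new endpoint and served with the expected MIME type. This is only a sketch: the function name is mine, and the file name in the usage line is hypothetical, so substitute a URL from your own CDP or AIA extensions.

```powershell
function Test-CdpUrl {
    param([Parameter(Mandatory = $true)][string]$Uri)

    # HEAD request: retrieves status and headers only, without downloading the file
    $response = Invoke-WebRequest -Uri $Uri -Method Head -UseBasicParsing -ErrorAction Stop

    [pscustomobject]@{
        Uri         = $Uri
        StatusCode  = [int]$response.StatusCode
        ContentType = "$($response.Headers['Content-Type'])"
    }
}

# Usage (hypothetical file name):
# Test-CdpUrl -Uri 'http://cdp.contoso-lab.lv/contoso-root-ca.crl'
```

This does not replace pkiview.msc, which also validates the content and expiration of the retrieved objects; it only confirms that the URL responds.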

Summary

In this four-part blog post series, we explored how to migrate on-premises certification authority CDP/AIA distribution points to cloud services that provide high availability, scalability, reliability and performance at low cost.

In the first post, we explored the starting design, the final design and the cloud services requirements.

In the second post, we explored the setup and configuration of redundant storage accounts with object replication between the accounts.

In the third post, we explored the setup and configuration of Azure Front Door, which provides the HTTP interface to PKI clients.

In this fourth and last post, we explored the configuration required in the on-premises network to upload data to cloud storage and how to switch PKI clients to cloud services.

Once again, try this approach in a test/dev environment first, make necessary adjustments, document the process and only then start production environment migration planning.
